Taylor Davidson · Thank you, ChatGPT

Every month or so I get an email asking what ecommerce platform or template I used for Foresight, and I think most people are surprised that the design is completely custom. I’ve built my own websites since 1998, but I don’t consider myself a web developer, just a person who is good at using educational resources to figure things out.

What I enjoy most about using AI tools like ChatGPT is that they expand the range of what I want to figure out. The simple view of AI tools is that they automate things that existing technology and people already do, and thus replace what exists today. I prefer to operate with a viewpoint towards expanding resources rather than allocating resources [1], and so I look at AI tools as expanding the range of things we can do. Not just faster or cheaper or incrementally better, but substantially additive to what exists today. And to be even more nuanced about it, it’s not just what we can do, but what we want to do. Yes, using AI technology enables me to do more things, but what’s most valuable to me is how the process of using it expands my ambitions for what I want to create.

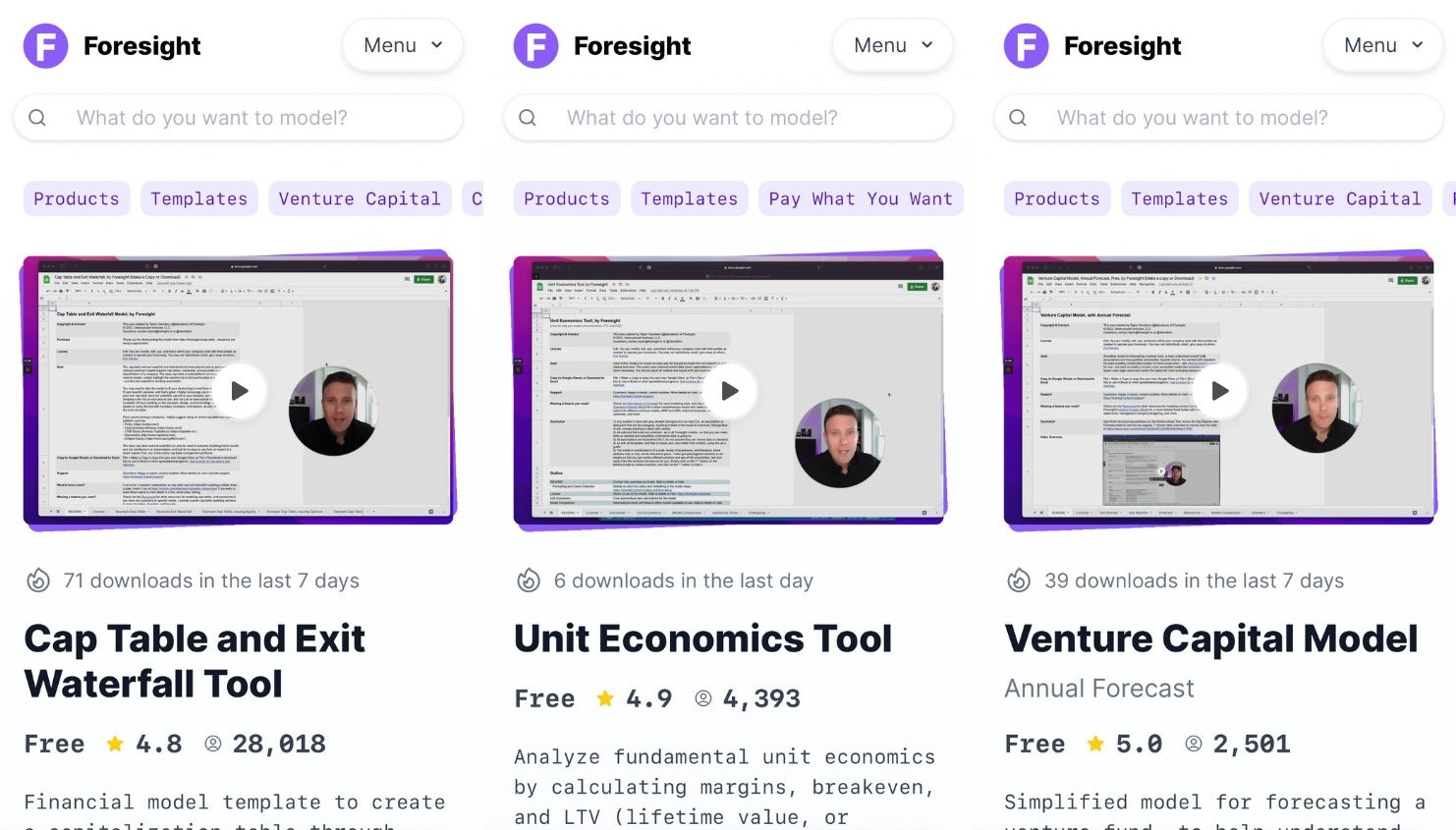

I have been working on refining Foresight’s website, and wanted to share one particular feature, the “N downloads in last X days” information that I recently added to the product pages. It’s a copy of a feature I’ve seen on Nike’s website, but it took a bit of work to create it for my site because of some data limitations.

For years, I’ve manually updated the downloads and user counts on the website based on information I track in a spreadsheet, but recently I wrote a bit of code - with ChatGPT’s help, of course - to use Gumroad’s API to pull down the product details and download counts, and store them in a JSON file that I can then use on the site. Of course, because I have a bit of marketing debt from operating this for 10+ years, I have to do some transformations on the raw data to handle product deprecations and changes in product download strategies, as well as add in historical data from other product download platforms.
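As a rough illustration of that transformation step (a sketch, not the actual script), the snippet below folds deprecated products into their successors and adds historical counts before writing the JSON file the site reads. The product IDs, the `MERGED_INTO` and `HISTORICAL` tables, and the shape of the raw counts are all hypothetical; the real Gumroad response and the real rules are not shown in this post.

```python
import json
from pathlib import Path

# Hypothetical mapping: retired product IDs -> the current product they fold into.
MERGED_INTO = {"old-model": "standard-model"}
# Hypothetical downloads recorded on earlier platforms, added on top of API counts.
HISTORICAL = {"standard-model": 1200}


def transform(raw_counts: dict[str, int]) -> dict[str, int]:
    """Fold deprecated products into their successors and add historical counts."""
    totals: dict[str, int] = {}
    for product_id, count in raw_counts.items():
        target = MERGED_INTO.get(product_id, product_id)
        totals[target] = totals.get(target, 0) + count
    for product_id, extra in HISTORICAL.items():
        totals[product_id] = totals.get(product_id, 0) + extra
    return totals


def save_latest(totals: dict[str, int], path: Path) -> None:
    """Write the current counts to the JSON file the site reads."""
    path.write_text(json.dumps(totals, indent=2, sort_keys=True))
```

Keeping the transformation as a pure function, separate from the API call and the file write, makes it easy to check the merge rules against a small hand-written input.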

That was relatively easy to do, especially because I learned how to do API calls and use JSON data when I built Ninerakes. But creating the “N downloads in last X days” took a bit of work; since Gumroad’s data just reports the number of downloads at the time of the API pull, calculating the delta from a time in the past means I have to start storing the data from past downloads. So I updated the script so that each time it runs to pull data from the API, the steps are:

- Access data from the API, perform the necessary transformations, and store the most recent data, which is used to display the current download counts on the site

- Store the data in a separate file by the UTC date

- Maintain 14 days of past data

- Calculate the difference in download counts for each product for the past 1 through 7 days, and store these deltas for each number of days

- Evaluate the differences by calculating the ratio of downloads per day for each of 1 through 7 days

- Take the difference that is “best”, with a bit of custom logic around number of total downloads and the ratio of downloads per day, and store the results of what was selected as the best difference to show

- Store the selected number of downloads and number of days selected as the best difference

- Display that on the site, if the number of downloads meets a minimum threshold

- Use a GitHub Action to automatically run the script just before the end of every day (on UTC time)

- Automatically redeploy the site to Netlify after the script runs, so that all the download counts are automatically updated.

Here’s the important note: you can’t just ask ChatGPT to build that. It takes a bit of prompt engineering, iterations, and learning to be able to build that, and each time I solved a problem in there it opened up new problems to solve.

A year ago I would never have been able to solve all of the problems and steps to create that, and I would not have paid a developer to build that specific code for a specific feature without knowing the ROI on the time necessary to do it. But now I was able to figure this out and implement it in less than a day, to test and see if it works. And it’s likely that implementing this will inspire increasingly complicated new features that I would not have envisioned if I had not gone through the process of learning how to do this. Certainly, the short-term optimization would have been to pay someone else to do this, but I believe that’s optimizing for a local maximum instead of the global maximum. Time will tell.

[1] Sometimes the worlds we live in do not allow for that viewpoint, but that’s a different story.