Taylor Davidson · Why image recognition, voice interfaces and machine learning will change your homescreen

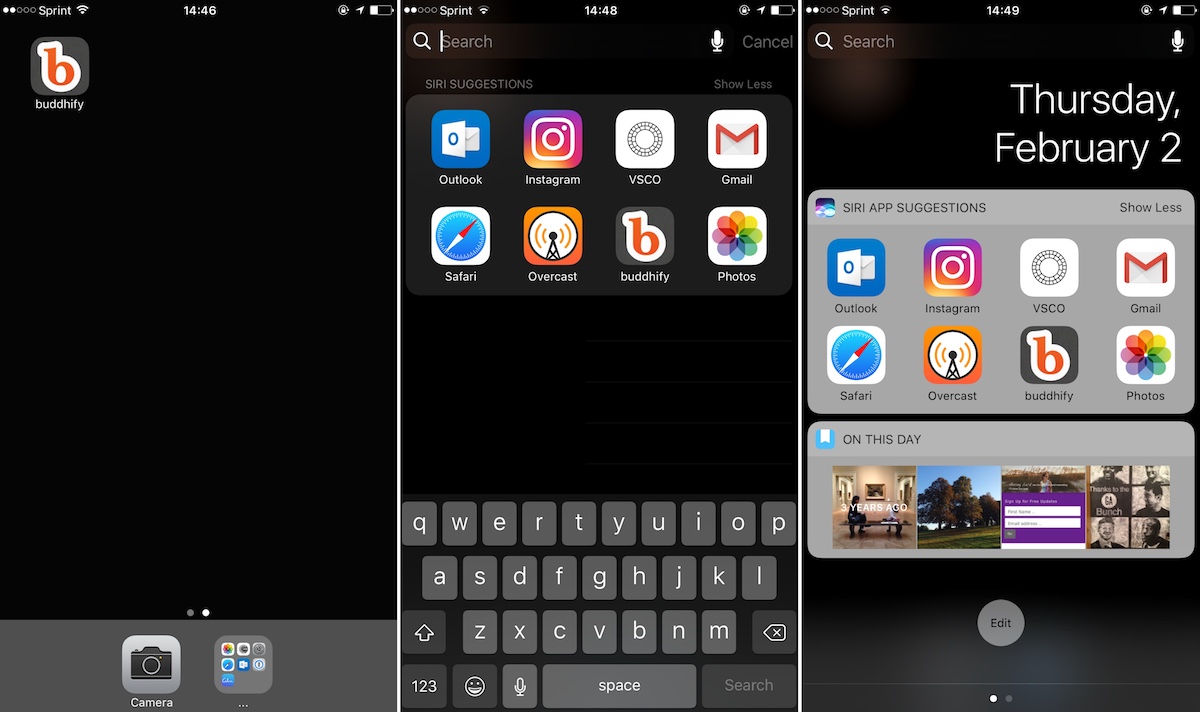

A year ago I reorganized my app layout on my phone’s homescreen and did something that might seem a bit drastic. I put almost every app into a single folder, and deposited that folder in the bottom drawer. And except for the first couple screens in the folder, the apps are organized essentially by the dominant color of their app logo, not by what they do. [1] I left a couple apps on the homescreen for aspirational reasons, to remind myself to use them daily, and over the last year I’ve changed these few apps on the homescreen to adjust what I wanted to emphasize in my daily life.

So… how do I use my phone? I either:

- Open that primary folder to use the apps on that first screen

- Search by pulling down and using the search field to find an app - or address, or contact, etc. - that I want

- Swipe right to the Notifications tab and use one of the Suggested Apps or one of the widgets

Instead of using my phone by picking apps from a carefully laid out homescreen, I largely use search and a “homescreen” that’s algorithmically created for me. That said, the sad part of this switch is that it’s probably not the efficient choice - not yet, at least. The algorithmically created homescreen, i.e. “Siri Suggested Apps”, is currently (iOS 10) based simply on which apps I’ve used recently or on my location. It ignores the deeper contextual data stored in my device and apps about where I am or what I might want to accomplish, which limits the true practicality of the approach.

But there’s no reason to believe that has to be the endstate. Improving the app suggestion algorithm to use additional contexts to figure out what I want to accomplish, and thus what app I likely want to use, could be the starting point to shifting how we use mobile devices, away from finding apps to accomplishing tasks. As simple as it sounds, it’s a profound shift, with billions of dollars in revenues hanging in the balance. The operating systems have the power to control how people use mobile, and they have the potential to shift how we accomplish things on mobile devices, by setting the rules, functionalities and technologies that enable themselves and third-party services to deliver products and services to people.
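
To make the idea of "additional contexts" concrete, here is a minimal sketch of a suggestion ranker that weighs past launches by how closely they match the current place and time of day. It is purely illustrative: the `Launch` record, the `suggest_apps` function, and the weights are all hypothetical, and nothing here reflects how iOS or Android actually rank suggested apps.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Launch:
    """One past app launch (hypothetical record, for illustration only)."""
    app: str
    hour: int   # local hour of the launch, 0-23
    place: str  # coarse location label, e.g. "home" or "office"


def suggest_apps(history, hour, place, k=3):
    """Rank apps by how often they were launched in a similar context.

    The weights below are arbitrary assumptions, not tuned values.
    """
    scores = Counter()
    for launch in history:
        score = 1.0  # baseline: any past use counts a little
        if launch.place == place:
            score += 2.0  # launched in the same place
        # Hours wrap around midnight, so measure the gap on a 24-hour circle.
        gap = abs(launch.hour - hour)
        gap = min(gap, 24 - gap)
        if gap <= 1:
            score += 2.0  # launched at about the same time of day
        scores[launch.app] += score
    return [app for app, _ in scores.most_common(k)]


history = [
    Launch("Mail", 9, "office"),
    Launch("Mail", 10, "office"),
    Launch("Podcasts", 18, "car"),
    Launch("Podcasts", 18, "car"),
    Launch("Podcasts", 8, "car"),
    Launch("Camera", 13, "park"),
]

print(suggest_apps(history, hour=9, place="office", k=2))
print(suggest_apps(history, hour=18, place="car", k=1))
```

Even a toy like this suggests different apps at the office in the morning than in the car in the evening. A real system would presumably draw on far richer signals (calendar, motion, connected devices) and learn its weights rather than hard-code them.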

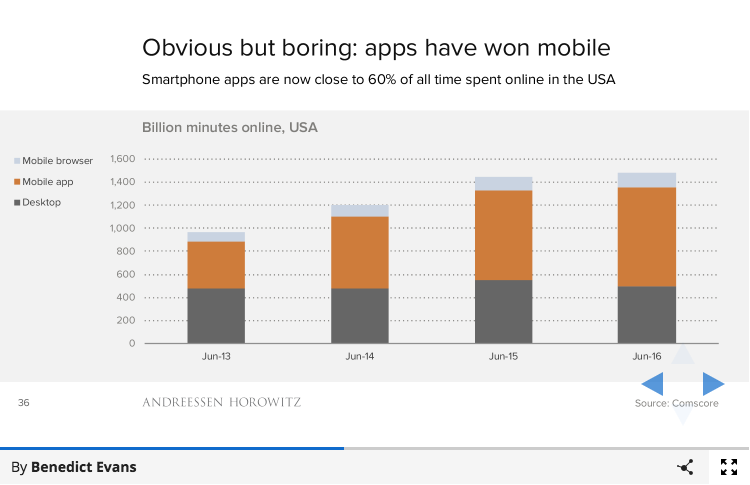

That’s why mobile is still interesting. Even though mobile has moved from the creation phase to the deployment phase [2], and everyone is more interested in what comes after mobile - the next platform that will create new innovations and fortunes - mobile still matters. [3] Machine learning, artificial intelligence, virtual reality, augmented reality, voice and image recognition: these new technologies and platforms are creating new products, new possibilities, and new fortunes, and all of them will use mobile for deployment. And even though apps have won …

… they are under strategic pressure. Apple and Google have the strategic and business incentive to rewire how their mobile operating systems work to profit from the next wave of innovation in mobile, which will largely rest on deploying these new technologies of information capture and processing. The operating systems will control how information is captured and processed: how that information is deployed will be up to apps. Brands have been strategically powerful methods for companies to win on mobile so far, but they do not inoculate companies from the disruption of new user experiences. The winners of today need not be the winners of tomorrow.

Mobile today is dominated by the apps you know and love (and hate), but the operating systems are about to rewire how mobile is won.

Fast Company published an article in December 2015 called “Why The Great App Unbundling Trend Is Already In Trouble”, and in my interview with Fast Company, I attempted to explain why many single-use apps from major players aren’t performing especially well:

“Most unbundled apps aren’t built because people want them,” Davidson says. “They’re built because they’re driven by a corporate reason, and not a user-driven one.”

Davidson believes this is just the beginning. With the rise of extensions and deep linking, apps will become a lot better at talking to one another without being coded specifically to do so. That means another primary motivation for unbundling - being able to pass users between multiple apps from the same company - will become less of a distinct advantage.

“Apple and Google . . . they’ve changed the rules of how people connect with apps, which means in many ways the core rationale for unbundling is less popular,” Davidson says.

The article was written shortly after Dropbox announced the sunsetting of Mailbox and Carousel, and of course, the day after the article was posted, Evernote announced they were shutting down a number of their single-purpose apps, adding fuel to the fire. Together, these announcements reignited the talk of the last couple of years about app constellations, app unbundling, and the future of apps. But since then, that conversation has died off. Why?

Perhaps the issue lies in how we use mobile. How many new apps have you downloaded in the past month? Probably zero, if you’re like 65% of people. [4] Many people have called the app boom over [5], and while we may spend 85% of our time on mobile using an app, 84% of that is spent in just five apps, which works out to roughly 71% of all time on mobile. [6] While those five apps vary from person to person, and opportunities for new apps still exist - Snap isn’t one of the apps featured in most of these app use studies, but is now nearing 150 MM monthly users and an IPO - for the majority of people our use is fairly concentrated and we choose to spend our time in a small set of apps.

But still, why? Is it because we don’t have the mental time and space to discover new apps, or are inflexible or unable to change our daily lives to use new apps, reflecting something fundamental about human behavior? Or does it reflect the broader concentration of time spent on the web, driven by the winners of the current stage of consumer technology? Or is it because the app discovery process makes it too difficult to find new apps? Or perhaps the fundamental way that apps are accessed and used forces us down a funnel to use only a few apps each day?

I’ve heard and read all of these arguments, and I think all play a role in the app swoon. We’re past the point where introducing a new app that does an existing thing better is a way to win; no, if you want to win on mobile today you need to create a new behavior.

That’s what people often forget about MySpace, Twitter, Facebook, Instagram, Snap, Amazon: each succeeded because they popularized a new user behavior. Enabling and latching onto something fundamentally different for people to do - tweet, share with friends, make (and share) better photos, share temporary photos, shop online - is how you create a wedge into someone’s life and become one of those five apps.

That’s why everyone is worried about what comes next. Many of the winners on mobile today were originally built for a desktop-based internet (excluding the newest winner, Snap), but the computing model has shifted to mobile-only and mobile-native. [7] Mobile brings very different means to interact with the world - different sensors, screens, inputs and interfaces than the desktop paradigm - and as technology gives us new abilities to see, structure and understand the world - powered by image recognition, machine learning, and voice interaction - what mobile can do, how it’s being done, and how people can accomplish things are on the cusp of changing. It’s obvious why today’s winners are making investments in algorithmic intelligence, machine learning, voice and image recognition: keep up with a changing computing paradigm or drop out of that top five. The risk isn’t about someone creating a new social networking app: the risk is that someone leverages these new technologies to see, understand, and create from the world around us, and in doing so creates a new behavior that today’s winners are fundamentally unable to support.

Ben Thompson discussed this in his Exponent podcast, pointing out how Snap enabled people to do something new - share photos that disappeared - and used that to ladder up to a more complete user and media experience. Facebook was not able to copy it because their culture, product, and the very reason behind their existence were diametrically opposed to the idea behind Snap. The reason why we win is also the reason why we lose.

The funny thing about much of what I wrote in 2013 through 2014 about mobile - unbundling, deep linking, app extensions - is that very little of it has had any real impact. The premise was that the most interesting thing in apps wasn’t about apps but about operating systems, and how iOS and Android had the potential to change how we use apps. But we haven’t seen either the operating systems or app developers push forward any fundamental shifts in how we use mobile. I still believe that’s where the opportunity lies.

Apple and Google have the strategic incentive to rewire how their mobile devices interface with the world. Voice interfaces, image recognition, and machine learning are on the cusp of mass deployment in phones, cars, and homes, and with that, Apple and Google finally have the incentive to use these technologies to let app developers build not just new apps, but new behaviors.

A step back: I was particularly enthused about app extensions in 2014, noting how they could enable a counter-trend to the push towards lightweight, unbundled apps, positing that “you could build heavy apps if you build light extensions”. But that never happened. The usage of app extensions has turned out to be light, and the risks high to developers that wanted to leverage the new opportunities. Third-party keyboards generated excitement when they first came out, but suffered in adoption and development as the discovery, installation and usage process proved to be too cumbersome for users. Photo editing extensions had minimal pickup, and have disappeared from the few photo apps that adopted them. Apple never committed to app extensions, never making other operating system changes necessary for them to thrive, and they largely disappeared until iOS 10 and iMessage app extensions.

Today, Apple and Google are under different pressures and face new opportunities. Each of these new interface and understanding technologies - voice interface, image recognition, and machine learning - could be built into the devices and operating systems to enable better products and services from themselves and third-party developers.

Both have taken small steps in this direction. Apple is bringing machine learning and algorithmic intelligence to the iPhone, focusing first on powering Siri, widgets, and their own apps:

If you’re an iPhone user, you’ve come across Apple’s AI, and not just in Siri’s improved acumen in figuring out what you ask of her. You see it when the phone identifies a caller who isn’t in your contact list (but did email you recently). Or when you swipe on your screen to get a shortlist of the apps that you are most likely to open next. Or when you get a reminder of an appointment that you never got around to putting into your calendar. Or when a map location pops up for the hotel you’ve reserved, before you type it in. Or when the phone points you to where you parked your car, even though you never asked it to. These are all techniques either made possible or greatly enhanced by Apple’s adoption of deep learning and neural nets.

Apple has taken strict measures to guard people’s privacy, potentially hampering their efforts in machine learning. [8] And these capabilities have not been offered to third-party developers. Google has taken a similar “us-first” strategy, notably with the launch of Google Assistant only for their Pixel phone, and not for their Android operating system [9]. From a product and technology perspective, this makes sense. Build the core interface and understanding technologies and work with your own teams to leverage them for your products. But strategically, at some point the pressure will come to open up and allow third-party developers to use them for their own apps. Apple and Google will not be able to solve all the problems that app developers work to solve today, and how they open up access to these new interface and understanding technologies will define how the new mobile computing method evolves.

That’s when our homescreens will change. That’s when our top five apps will change. Right now we’re just pushing pixels around on our screens, reorganizing how we get to the apps that help us accomplish things. But once mobile fully embraces voice interaction, image recognition, and machine learning, our behaviors will adapt, and our usage patterns and preferences will change. Better “suggested apps” screens will be a start, using more contextual data to guess at what I want to accomplish, but the longer-scale change will be something deeper.

There is no reason to believe that our current use of mobile is the endstate. Google Glass and Snap’s Spectacles were / are visions of alternate approaches to mobile where our use of technology isn’t defined by us looking down and typing on small devices. Apple’s AirPods and Watch are methods to leverage a mobile phone for content and communication, but without using the phone as the primary, direct access method. Bots got a lot of attention in 2016 as a hot new way to use the Internet, and while the examples of successful bots have been few, the reason isn’t the idea of conversational bots as an interface to information; it’s that the underlying technologies that enable bots to succeed - notably machine learning, voice interfaces, algorithmic intelligence - are still raw themselves.

And of course the hot product of today - Amazon’s Alexa [10] - isn’t a phone, and it isn’t mobile. Google Home and other voice-operated “computers” will help shift to a new computing paradigm, and one has to believe that the lessons learned from these devices, and the technologies developed for them, will find their way into mobile devices.

The phone of today need not be the phone of tomorrow. The dominant method of using mobile today - looking down and typing on a bright, small screen - doesn’t need to be the future. That’s the potential of the technologies today, not to free us from mobile, but to redefine how we use it. And that’s an opportunity that none of today’s winners can pass up.

[1] Yes, color coded. It’s amazing how similar the colors are of so many apps.

[2] Mobile is eating the world, Benedict Evans, December 2016.

[3] Perhaps not the venture capitalists and startups looking for venture-capital type returns, but mobile still matters to the billions of people that use mobile technologies and the companies looking to serve them.

[4] Most smartphone users download zero apps per month, Quartz, August 2014. Admittedly old data, but I couldn’t find a more recent study and the behavior still seems to hold.

[5] The app boom is over, Recode, June 2016.

[6] Consumers Spend 85% Of Time On Smartphones In Apps, But Only 5 Apps See Heavy Use, Techcrunch, June 2015.

[7] Mobile is eating the world, Benedict Evans, December 2016.

[8] It’s an issue open to debate, and there is a chance that Apple is shifting their position on machine learning and AI to being more open. So far, they have been proponents of the small data approach to big data analytics, but time will tell if that focus on privacy is one that helps with consumers more than it potentially hurts them with product features.

[9] An interesting strategy, obviously aimed at differentiating the Pixel phone and driving demand for their own phone. But that limitation appears to be creaking open, as Assistant is now available on Google Home, Android TV, and their Allo chat app, and it’s possible (strategically, at least) to see it becoming available for certain wireless carriers carrying Android devices, although I don’t think there has been much of a sign of that yet.

[10] Voice Is the Next Big Platform, and Alexa Will Own It, Backchannel, Jessi Hempel, December 2016.